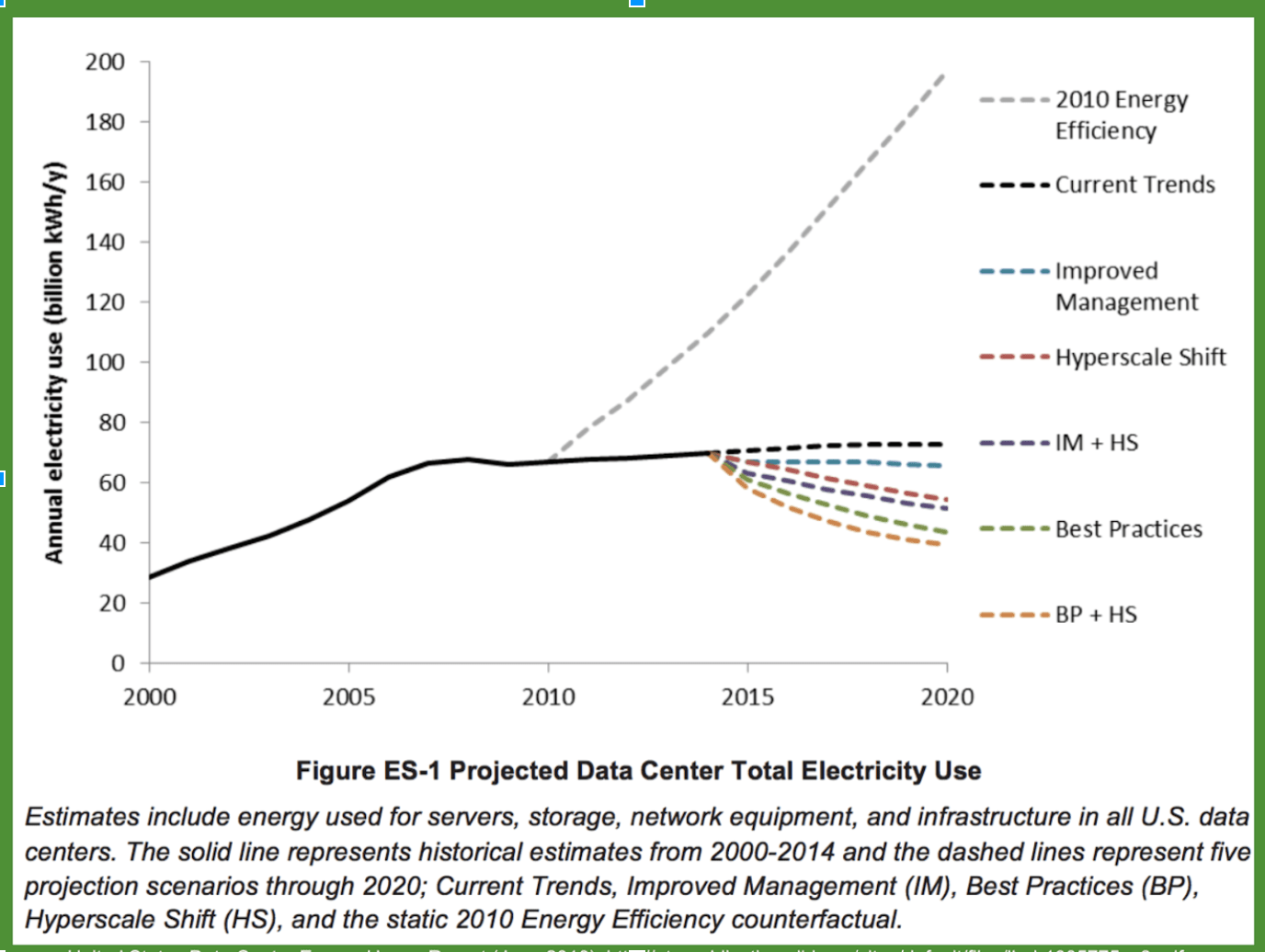

Between 2000 and 2010, global data center energy consumption more than doubled. Because internet access was expanding rapidly around the world, scientists expected this upward trend to continue, with many predicting that data center energy consumption would triple from 2010 to 2020.

Yet throughout the 2010s, despite a large increase in the number of servers, data center energy consumption stayed close to its 2008 level, increasing only about 4% per year. Why did energy usage stop growing in step with internet usage? The answer helps explain the complex relationship between technology expansion, the environment, and the economy.
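To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It assumes growth compounds at a constant yearly rate, which is a simplification the figures above do not claim, and the function names are purely illustrative.

```python
def annual_rate(multiple: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1 / years) - 1


def growth_multiple(rate: float, years: int) -> float:
    """Total growth factor after compounding `rate` for `years` years."""
    return (1 + rate) ** years


# Doubling over a decade (the 2000 to 2010 trend, taken as exactly 2x).
print(f"doubling in 10 years: {annual_rate(2, 10):.1%} per year")  # 7.2%

# Tripling over a decade (the prediction for 2010 to 2020).
print(f"tripling in 10 years: {annual_rate(3, 10):.1%} per year")  # 11.6%

# About 4% per year, compounded over a decade (assumed constant rate).
print(f"4% per year for 10 years: {growth_multiple(0.04, 10):.2f}x")  # 1.48x
```

Under that assumption, the predicted tripling would have required growth of nearly 12% per year, roughly three times the 4% per year cited above.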

In 2008, researchers from the Lawrence Berkeley National Laboratory conducted a study projecting global data center energy usage (see graph). They reported their findings to Congress that same year, highlighting the dramatic trends of previous decades and predicting that continued technological growth would greatly affect global greenhouse gas emissions. Although it is not certain that this presentation had any direct effect on data center efficiency, people around the world began working to improve the efficiency of data centers soon afterward. Companies realized that if their data centers' energy consumption continued to grow as the initial trends predicted, their energy costs would soar, hurting both the environment and their profits.

Corporations with big, high-traffic data centers began devoting a large portion of their budgets to their data centers' energy efficiency. In fact, despite their size, as of 2014, "large corporation cloud server farms" accounted for less than 5% of global data center energy consumption, and Greenpeace rated three major tech corporations (Google, Facebook, and Apple) the greenest tech companies in 2017. How are such expansive data centers so efficient? For one, all three have succeeded in powering their data centers entirely with renewable energy or renewable energy subsidies (see their environmental reports: Facebook, Google, Apple). Additionally, each of them has devoted time and money to studying how to make its own data centers more efficient, researching and improving factors such as water conservation, waste management, location, weather, and server power. They started building many of their new data centers in Nordic countries, where the cold climate naturally cools the servers throughout the year. Google is focusing on creating zero-waste-to-landfill data centers, and Facebook has designed a data center that uses 80% less water. These efforts have had a significant impact on sustainability and could save each company millions of dollars on electricity.

However, these large data centers make up a minuscule proportion of data centers worldwide. How did smaller, lower-budget data centers decrease their environmental impact? Fortunately, there are also many cost-effective ways to improve data center efficiency. Within the past couple of decades, researchers have studied and designed enhanced cooling technologies and data center layouts that are vastly more energy efficient than previous models. Additionally, the invention and expansion of server virtualization, consolidation, and cloud computing have given smaller data centers the ability to become more efficient. The vast majority of companies that did not have the budget to build their data centers overseas or buy solar farms used these studies and technologies to improve their data center efficiency, contributing to the slowdown in energy consumption growth. Even today, as technologies continue to improve, data center energy consumption is only expected to rise at a marginal rate, despite an increasing number of servers.
